Context Graphs, Data Traces & Transcripts

Some thoughts on the latest buzzwords

I’m seeing the phrase "context graphs" appearing in my feed lately. Either a Gartner deck landed somewhere, or the LLM crowd is trying out a new buzzword of late, trying to get ahead of the whole graph thing. Not sure, but I’ve got a few thoughts here.

The Not So Lowly Meeting Minutes

A knowledge graph is a valuable tool; it’s my business, I stand by them, and I see them as a critical part of AI infrastructure moving forward. However, the central idea of context graphs is that you should be able to determine exactly when a decision was made and why, and that is something that a knowledge graph by itself can only give you VERY indirectly, even with RDF-Star annotations and SHACL and all that. This is because the knowledge graph may be able to infer the existence of a decision from the data it contains, but it really has no context for what the decision was or why it was made, if you are attempting to gain context from forensics.

The reason for this is simple. Most decisions in organisations are made by people, almost invariably within meetings. Sometimes those meetings are pro forma: the CEO wants to start an initiative but also wants to get buy-in from his reports; the project manager wants to assign a new task to a programmer; a contract artist meets with a creative director to discuss new content. Nonetheless, such decisions typically involve one or more individuals accepting a contract or role from another party to perform specific actions within specified criteria and constraints.

For a long time, the sticking point was capturing when those decisions were made, by whom, when, and how. Stenography, also known as shorthand, was invented primarily because human speech typically exceeds the speed at which someone can write in longhand. Well into the 1960s, most meetings were captured (sometimes ambiguously) by stenographers as part of a secretary’s training. The advent of portable tape recorders in the 1960s mostly obsoleted this role, with the emphasis switching from scribbling furiously at a meeting or capturing the meeting afterwards by using a combination of treadle-controlled tape recorders and a typewriter to create a transcription of the proceedings, the paper output of the recording then becoming known as a transcript.

Fast forward to the early 2020s. While teleconferencing systems such as Webex had been around for a few years, COVID forced a rapid upgrade in network capacity to handle large numbers of participants at low cost, and led to a surge in such services, including Microsoft Teams, Zoom, and Google Meet. This also spurred the development of AI (e.g., OtterAI) for intelligent transcription services that can unambiguously identify who is speaking, what they are saying, and whether trigger words or phrases are present to initiate specific actions. Chat became an important secondary stream and may have been an impetus for the development of chat systems and, subsequently, GenAI.

Creating Context Graphs

What was most important here was the establishment of a mechanism to automate meeting note-taking. This seemingly simple jump may have subtle but long-term ramifications. One of the major problems with most transcriptions is that they remain archival records only and are seldom actively processed. People may return to review them for research, but most are buried as files on hard drives and are never seen again. However, the same AI that generated the transcript in the first place may now be one to turn those same notes into context graphs.

Transcripts have a great deal of metadata - who attended a meeting, what was said, where and when it took place, who was speaking at any given time, and so forth. Beyond this, the conversation itself has a deep narrative context that can be mined, including what was discussed, which positions were taken, arguments for and against ideas, which decisions were proposed and rejected, and who ultimately made those decisions.

The following gives an idea about what such a transcript may look like (AI Generated):

SAMPLE MEETING TRANSCRIPT

=========================

Meeting: Q1 2026 Product Strategy Review

Date: January 11, 2026

Time: 10:00 AM - 11:30 AM PST

Location: Microsoft Teams (Meeting ID: 123-456-789)

Organizer: sarah.johnson@example.com

ATTENDEES:

- Sarah Johnson (sarah.johnson@example.com) - VP of Product, Meeting Organizer

- Michael Chen (michael.chen@example.com) - Senior Product Manager

- Jennifer Martinez (jennifer.martinez@example.com) - Engineering Lead

- David Park (david.park@example.com) - Data Scientist

- Lisa Anderson (lisa.anderson@example.com) - UX Designer

---

[10:02 AM] Sarah Johnson: Good morning everyone, thanks for joining. Let's get started. We have three main items on the agenda today: first, the proposed AI feature integration; second, the Q1 budget allocation; and third, timeline for the mobile app redesign. Let's start with the AI feature proposal. Michael, you're leading this one.

[10:03 AM] Michael Chen: Thanks Sarah. So, we've been evaluating several large language models for integration into our platform. Based on our analysis, I'm proposing we adopt Claude by Anthropic for our customer support automation. The API costs are reasonable, and the quality of responses is superior to alternatives we tested.

[10:04 AM] Jennifer Martinez: I have concerns about the infrastructure requirements. Do we have the capacity to handle the API load during peak hours? Our current architecture might need significant upgrades.

[10:05 AM] David Park: Jennifer raises a good point. I ran some projections using our current traffic data. At peak load, we're looking at approximately 500 API calls per minute. That's well within Anthropic's rate limits, but we'd need to implement proper caching and fallback mechanisms.

[10:06 AM] Sarah Johnson: What's the estimated cost impact, David?

[10:06 AM] David Park: Based on Claude's pricing, we're looking at roughly $8,000 per month initially, scaling to maybe $15,000 as adoption grows. That's about 30% less than our current human support costs for similar volume.

[10:07 AM] Lisa Anderson: From a UX perspective, I'd like us to ensure we're transparent with users about when they're interacting with AI versus human agents. We should follow ethical AI principles here.

[10:08 AM] Michael Chen: Absolutely agreed. I propose we implement a clear disclosure system and give users the option to escalate to human support at any time.

[10:09 AM] Sarah Johnson: Okay, let me propose this formally: We adopt Claude for customer support automation with the following conditions - implement proper disclosure, provide human escalation options, and Jennifer's team gets two weeks to assess and document infrastructure requirements before we proceed. Can we vote on this?

[10:09 AM] Michael Chen: I vote in favor.

[10:10 AM] Jennifer Martinez: I vote in favor, with the caveat that we delay launch if infrastructure concerns aren't resolved.

[10:10 AM] David Park: In favor.

[10:10 AM] Lisa Anderson: In favor.

[10:11 AM] Sarah Johnson: That's unanimous. Motion passes. Michael, you'll coordinate with Jennifer's team on the infrastructure assessment. Next item - Q1 budget allocation. We have $250,000 to allocate across three initiatives: the AI integration we just approved, the mobile redesign, and a new data analytics dashboard. David, you had a proposal for the analytics dashboard?

[10:12 AM] David Park: Yes. I'm requesting $80,000 for a comprehensive analytics dashboard that would integrate with our data warehouse. This would use tools like Apache Superset or Tableau for visualization. The dashboard would track key metrics including user engagement, conversion rates, and feature adoption.

[10:13 AM] Michael Chen: I think the analytics dashboard is important, but that's nearly a third of our budget. Could we start with a smaller proof-of-concept first?

[10:14 AM] David Park: We could, but we'd lose some of the integration benefits. A proof-of-concept would be maybe $30,000, but we'd likely need to rebuild significant portions when we scale up.

[10:15 AM] Jennifer Martinez: What if we phased it? Start with core metrics for $40,000, then expand based on actual usage and value demonstrated?

[10:15 AM] David Park: That could work. We'd focus initially on customer journey analytics and revenue attribution.

[10:16 AM] Sarah Johnson: I like the phased approach. Let's allocate $40,000 for phase one of the analytics dashboard. That leaves $210,000 for AI integration and mobile redesign. Michael, what's your budget need for the AI project?

[10:17 AM] Michael Chen: Including development, testing, and the first six months of API costs, we're looking at $90,000.

[10:17 AM] Sarah Johnson: That leaves $120,000 for mobile redesign. Lisa, is that sufficient?

[10:18 AM] Lisa Anderson: It's tight, but manageable. We'd planned for $150,000, so we'd need to cut some features or delay the iOS version to focus on Android first.

[10:19 AM] Michael Chen: Could we potentially table the analytics dashboard and allocate that budget to mobile? The dashboard, while useful, isn't customer-facing.

[10:20 AM] David Park: I disagree strongly. Without proper analytics, we're flying blind. We won't know if the AI integration or mobile redesign are actually successful. This is a strategic investment in decision-making capability.

[10:21 AM] Sarah Johnson: David makes a valid point about measurement. Let me propose an alternative - we approve the analytics dashboard at $40,000 and the AI integration at $90,000 as discussed. For mobile, we allocate $120,000 with the understanding that Lisa's team will prioritize features and potentially release in phases. Can we vote on this budget allocation?

[10:22 AM] Jennifer Martinez: Before we vote, I want to ensure we're holding back some contingency. These projects typically run over budget.

[10:22 AM] Sarah Johnson: Good point. Let's hold back $20,000 as contingency. So: $40,000 analytics, $85,000 AI integration, $105,000 mobile redesign, $20,000 contingency. Total: $250,000.

[10:23 AM] Michael Chen: I vote in favor of this allocation.

[10:23 AM] Jennifer Martinez: In favor.

[10:23 AM] David Park: In favor.

[10:24 AM] Lisa Anderson: I vote in favor, though I want it noted that the mobile timeline may slip as a result.

[10:24 AM] Sarah Johnson: Noted. Motion passes. Now, final agenda item - the mobile app redesign timeline. Lisa, walk us through your proposal.

[10:25 AM] Lisa Anderson: We're planning a complete overhaul of the mobile experience. Current users rate us 3.2 stars on average, primarily due to navigation issues and slow performance. The redesign addresses these concerns with a new information architecture and optimized code. We're targeting a 4.0+ star rating post-launch.

[10:26 AM] Lisa Anderson: With the $105,000 budget, I'm proposing a phased rollout. Phase 1 would be Android only, launching in April. Phase 2 would be iOS, launching in June. This gives us time to incorporate user feedback from Android before iOS release.

[10:27 AM] Jennifer Martinez: From an engineering perspective, the April timeline for Android is aggressive but doable if we start immediately. We'd need to allocate three developers full-time.

[10:28 AM] Michael Chen: What about beta testing? Are we factoring in time for that?

[10:28 AM] Lisa Anderson: Yes, we'd do a two-week beta with select users in March for Android.

[10:29 AM] David Park: Can we tie this to the analytics dashboard? I'd like to have proper tracking in place before we launch so we can measure impact accurately.

[10:29 AM] Sarah Johnson: That's a good point. Jennifer, can your team coordinate timing so analytics tracking is deployed before mobile launch?

[10:30 AM] Jennifer Martinez: Yes, we can prioritize the analytics infrastructure for March completion.

[10:31 AM] Sarah Johnson: Excellent. Any other concerns about the mobile timeline?

[10:32 AM] Michael Chen: Just one - should we consider holding this project until Q2 given our budget constraints? We're already stretching resources thin.

[10:33 AM] Lisa Anderson: I'd argue against that. Our mobile ratings are hurting app store visibility. Every month we delay costs us potential users. The negative reviews are starting to mention competitors by name, particularly Notion and Asana.

[10:34 AM] David Park: Lisa's right. I've been tracking app store sentiment, and we're losing ground. This is urgent.

[10:35 AM] Sarah Johnson: Okay, I'm not calling for a vote on this since we're not making a decision, just approving a timeline. Unless there are objections, we'll proceed with Lisa's phased approach - Android in April, iOS in June. Speak now if you object.

[10:36 AM] Michael Chen: No objection, but I want to schedule a checkpoint meeting in February to assess progress.

[10:36 AM] Sarah Johnson: Agreed. Jennifer, can you schedule that?

[10:37 AM] Jennifer Martinez: Will do.

[10:37 AM] Sarah Johnson: Alright, before we wrap up, let me summarize our decisions. First, we're adopting Claude for customer support with proper disclosure and human escalation. Second, budget is allocated as follows: $40,000 analytics, $85,000 AI integration, $105,000 mobile, $20,000 contingency. Third, mobile redesign proceeds with Android in April and iOS in June. Action items: Michael coordinates with Jennifer on AI infrastructure assessment, Jennifer schedules February checkpoint meeting, and David begins Phase 1 analytics dashboard planning. Anything else?

[10:38 AM] David Park: One quick item - I'd like to propose we standardize on Python for all data science work. Currently we have a mix of R and Python, and it's creating maintenance issues.

[10:39 AM] Sarah Johnson: That's a good point, but we're out of time today. Let's table that discussion for our next meeting. Add it to the agenda.

[10:39 AM] David Park: Will do.

[10:40 AM] Sarah Johnson: Thanks everyone. Meeting adjourned.

[Meeting ends: 10:40 AM]There are a few meeting transcription ontologies (which are different from RNA transcription ontologies, which have nothing to do with meetings and everything to do with genetic engineering), and none using SHACL. Fortunately, it was fairly easy to generate such an ontology using Claude that handled most of the basics, using the prompt:

ORIGINAL PROJECT PROMPT

========================

Using https://www.w3.org/TR/shacl12-core/, create a comprehensive SHACL ontology for converting meeting transcriptions into RDF summaries.

This would need to handle:

- Determining speakers (use emails as a primary identifier)

- When a comment is made

- Where a meeting occurs (assume an online address tied into a telepresence account such as Teams or Google Meet)

- Who called the meeting

- And so forth

It also will need to analyse text to determine:

- Intent of statements

- Any discussion and resolutions

- Tabling, or voting down specific decisions

- Topical intent

- Any specific entity extraction that can be used with an active taxonomy (assume Wikidata as a taxonomy model)

The intent of this schema is to create a foundation for tracing decision making through an organisation.

Requirements:

- Use RDF-Star liberally to simplify structuring

- Assume both a timestamp, current relative time and xsd:dateTime indicator for tracking time

- This is a foundational schema, but can incorporate SHACL rules as necessary for computational purposes

Deliverables:

1. Generate the SHACL schema

2. Create a sample transcript showing a few different decision making and discussion scenarios

3. Using the derived SHACL schema, generate a corresponding RDF Turtle file holding that scenario as RDF in the associated schema

Context:

- User works primarily with W3C RDF stack - Turtle, SPARQL, SHACL

- User is a developer working typically in conjunction with either NodeJS or Python

- User is typically looking to develop conversion and parsing tools

This created the SHACL file, which, for purposes of discussion, I’ll let you, gentle reader, check out on your own.

This SHACL file is then used internally to prompt the creation of an output Turtle file that converts the transcript into RDF. A sample from this file can be seen as:

@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .

@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

@prefix dcterms: <http://purl.org/dc/terms/> .

@prefix foaf: <http://xmlns.com/foaf/0.1/> .

@prefix schema: <http://schema.org/> .

@prefix prov: <http://www.w3.org/ns/prov#> .

@prefix wd: <http://www.wikidata.org/entity/> .

@prefix wdt: <http://www.wikidata.org/prop/direct/> .

@prefix mt: <http://example.org/meeting-transcript/> .

@prefix mto: <http://example.org/meeting-transcript/ontology#> .

# ============================================================================

# MEETING METADATA

# ============================================================================

mt:meeting-20260111 a mto:Meeting ;

dcterms:identifier "meeting-20260111-product-strategy" ;

dcterms:title "Q1 2026 Product Strategy Review" ;

mto:scheduledStartTime "2026-01-11T10:00:00-08:00"^^xsd:dateTime ;

mto:actualStartTime "2026-01-11T10:02:00-08:00"^^xsd:dateTime ;

mto:actualEndTime "2026-01-11T10:40:00-08:00"^^xsd:dateTime ;

mto:duration "PT38M"^^xsd:duration ;

mto:organizer mt:participant-sarah ;

mto:location mt:location-teams-123456789 ;

mto:participant mt:participant-sarah,

mt:participant-michael,

mt:participant-jennifer,

mt:participant-david,

mt:participant-lisa ;

mto:hasAgendaItem mt:agenda-item-1,

mt:agenda-item-2,

mt:agenda-item-3 ;

mto:hasDecision mt:decision-ai-adoption,

mt:decision-budget-allocation,

mt:decision-mobile-timeline ;

mto:hasTranscript mt:transcript-20260111 .

# ============================================================================

# LOCATION

# ============================================================================

mt:location-teams-123456789 a mto:VirtualLocation ;

mto:platform "Teams" ;

mto:meetingId "123-456-789" ;

mto:accountId "example-company-teams" .

# ============================================================================

# PARTICIPANTS

# ============================================================================

mt:participant-sarah a mto:Participant ;

foaf:mbox "sarah.johnson@example.com" ;

foaf:name "Sarah Johnson" ;

schema:jobTitle "VP of Product" ;

mto:role "chair" ;

mto:attendanceStatus "present" .

mt:participant-michael a mto:Participant ;

foaf:mbox "michael.chen@example.com" ;

foaf:name "Michael Chen" ;

schema:jobTitle "Senior Product Manager" ;

mto:role "presenter" ;

mto:attendanceStatus "present" .

mt:participant-jennifer a mto:Participant ;

foaf:mbox "jennifer.martinez@example.com" ;

foaf:name "Jennifer Martinez" ;

schema:jobTitle "Engineering Lead" ;

mto:role "attendee" ;

mto:attendanceStatus "present" .

mt:participant-david a mto:Participant ;

foaf:mbox "david.park@example.com" ;

foaf:name "David Park" ;

schema:jobTitle "Data Scientist" ;

mto:role "presenter" ;

mto:attendanceStatus "present" .

mt:participant-lisa a mto:Participant ;

foaf:mbox "lisa.anderson@example.com" ;

foaf:name "Lisa Anderson" ;

schema:jobTitle "UX Designer" ;

mto:role "presenter" ;

mto:attendanceStatus "present" .

# ============================================================================

# AGENDA ITEMS

# ============================================================================

mt:agenda-item-1 a mto:AgendaItem ;

dcterms:identifier "agenda-1-ai-integration" ;

dcterms:title "AI Feature Integration Proposal" ;

dcterms:description "Evaluation and decision on adopting Claude by Anthropic for customer support automation" ;

mto:sequenceNumber 1 ;

mto:presenter mt:participant-michael ;

mto:startTime "2026-01-11T10:03:00-08:00"^^xsd:dateTime ;

mto:endTime "2026-01-11T10:11:00-08:00"^^xsd:dateTime ;

mto:relatedDecision mt:decision-ai-adoption ;

mto:topicClassification wd:Q11660 ; # Artificial intelligence

mto:status "decided" .

# ============================================================================

# UTTERANCES (Sample - showing key utterances)

# ============================================================================

mt:utterance-001 a mto:Utterance ;

dcterms:identifier "utt-001" ;

mto:speaker mt:participant-sarah ;

mto:timestamp "2026-01-11T10:02:00-08:00"^^xsd:dateTime ;

mto:relativeTime "PT0S"^^xsd:duration ;

mto:sequenceNumber 1 ;

rdf:value "Good morning everyone, thanks for joining. Let's get started. We have three main items on the agenda today: first, the proposed AI feature integration; second, the Q1 budget allocation; and third, timeline for the mobile app redesign. Let's start with the AI feature proposal. Michael, you're leading this one." ;

mto:intent mt:intent-001 ;

mto:relatedToAgenda mt:agenda-item-1 ;

mto:sentiment "neutral" .

mt:intent-001 a mto:Intent ;

mto:intentType "procedural" ;

mto:confidence 0.95 ;

mto:topic "meeting-opening" .

mt:utterance-002 a mto:Utterance ;

dcterms:identifier "utt-002" ;

mto:speaker mt:participant-michael ;

mto:timestamp "2026-01-11T10:03:00-08:00"^^xsd:dateTime ;

mto:relativeTime "PT1M"^^xsd:duration ;

mto:sequenceNumber 2 ;

rdf:value "Thanks Sarah. So, we've been evaluating several large language models for integration into our platform. Based on our analysis, I'm proposing we adopt Claude by Anthropic for our customer support automation. The API costs are reasonable, and the quality of responses is superior to alternatives we tested." ;

mto:intent mt:intent-002 ;

mto:relatedToAgenda mt:agenda-item-1 ;

mto:mentionsEntity wd:Q126418099, # Claude (AI assistant)

wd:Q108266254 ; # Anthropic

mto:sentiment "positive" .

mt:intent-002 a mto:Intent ;

mto:intentType "proposal" ;

mto:confidence 0.98 ;

mto:topic "ai-integration" .

mt:entity-mention-001 a mto:EntityMention ;

mto:mentionedIn mt:utterance-002 ;

mto:entityUri wd:Q126418099 ;

mto:entityLabel "Claude (AI assistant)" ;

mto:entityType wd:Q11660 ; # AI type

mto:textSpan "Claude by Anthropic" ;

mto:confidence 0.95 .

# ============================================================================

# DISCUSSIONS

# ============================================================================

mt:discussion-ai-integration a mto:Discussion ;

dcterms:identifier "disc-ai-integration" ;

mto:topic "AI Feature Integration for Customer Support" ;

mto:startedAt "2026-01-11T10:03:00-08:00"^^xsd:dateTime ;

mto:endedAt "2026-01-11T10:11:00-08:00"^^xsd:dateTime ;

mto:includesUtterance mt:utterance-002,

mt:utterance-003,

mt:utterance-004,

mt:utterance-005,

mt:utterance-006 ;

mto:participants mt:participant-michael,

mt:participant-jennifer,

mt:participant-david,

mt:participant-lisa,

mt:participant-sarah ;

mto:consensus "unanimous" ;

mto:summary "Team unanimously agreed to adopt Claude for customer support automation with conditions for disclosure, human escalation, and infrastructure assessment." .

mt:discussion-budget a mto:Discussion ;

dcterms:identifier "disc-budget" ;

mto:topic "Q1 Budget Allocation Strategy" ;

mto:startedAt "2026-01-11T10:11:00-08:00"^^xsd:dateTime ;

mto:endedAt "2026-01-11T10:24:00-08:00"^^xsd:dateTime ;

mto:includesUtterance mt:utterance-011,

mt:utterance-012,

mt:utterance-013 ;

mto:participants mt:participant-david,

mt:participant-michael,

mt:participant-jennifer,

mt:participant-sarah ;

mto:consensus "majority" ;

mto:summary "Budget allocated with phased approach for analytics dashboard, reduced AI integration budget, and constrained mobile redesign budget with contingency fund." .

# ============================================================================

# ACTION ITEMS

# ============================================================================

mt:action-infrastructure-assessment a mto:ActionItem ;

dcterms:identifier "action-infra-assess" ;

dcterms:title "AI Infrastructure Assessment" ;

mto:assignedTo mt:participant-jennifer, mt:participant-michael ;

mto:dueDate "2026-01-25T17:00:00-08:00"^^xsd:dateTime ;

mto:priority "high" ;

mto:status "pending" .

mt:action-analytics-planning a mto:ActionItem ;

dcterms:identifier "action-analytics-plan" ;

dcterms:title "Begin Phase 1 Analytics Dashboard Planning" ;

mto:assignedTo mt:participant-david ;

mto:dueDate "2026-01-18T17:00:00-08:00"^^xsd:dateTime ;

mto:priority "medium" ;

mto:status "pending" .

# ============================================================================

# RDF-STAR ANNOTATIONS

# ============================================================================

# Annotating key decision provenance with RDF-Star

<<mt:decision-ai-adoption mto:decisionType "approved">>

prov:wasAttributedTo mt:participant-sarah ;

dcterms:created "2026-01-11T10:11:00-08:00"^^xsd:dateTime ;

mto:confidence 1.0 ;

mto:sourceUtterance mt:utterance-006 .

<<mt:decision-ai-adoption mto:rationale "Claude provides superior response quality at reasonable cost (estimated $8-15K/month), 30% less than current human support costs. Infrastructure capacity confirmed at 500 API calls/minute within rate limits. Ethical considerations addressed through disclosure and human escalation options.">>

prov:wasAttributedTo mt:participant-michael, mt:participant-david, mt:participant-lisa ;

dcterms:created "2026-01-11T10:11:00-08:00"^^xsd:dateTime ;

mto:confidence 0.95 .

<<mt:decision-budget-allocation mto:decisionType "approved">>

prov:wasAttributedTo mt:participant-sarah ;

dcterms:created "2026-01-11T10:24:00-08:00"^^xsd:dateTime ;

mto:confidence 1.0 .

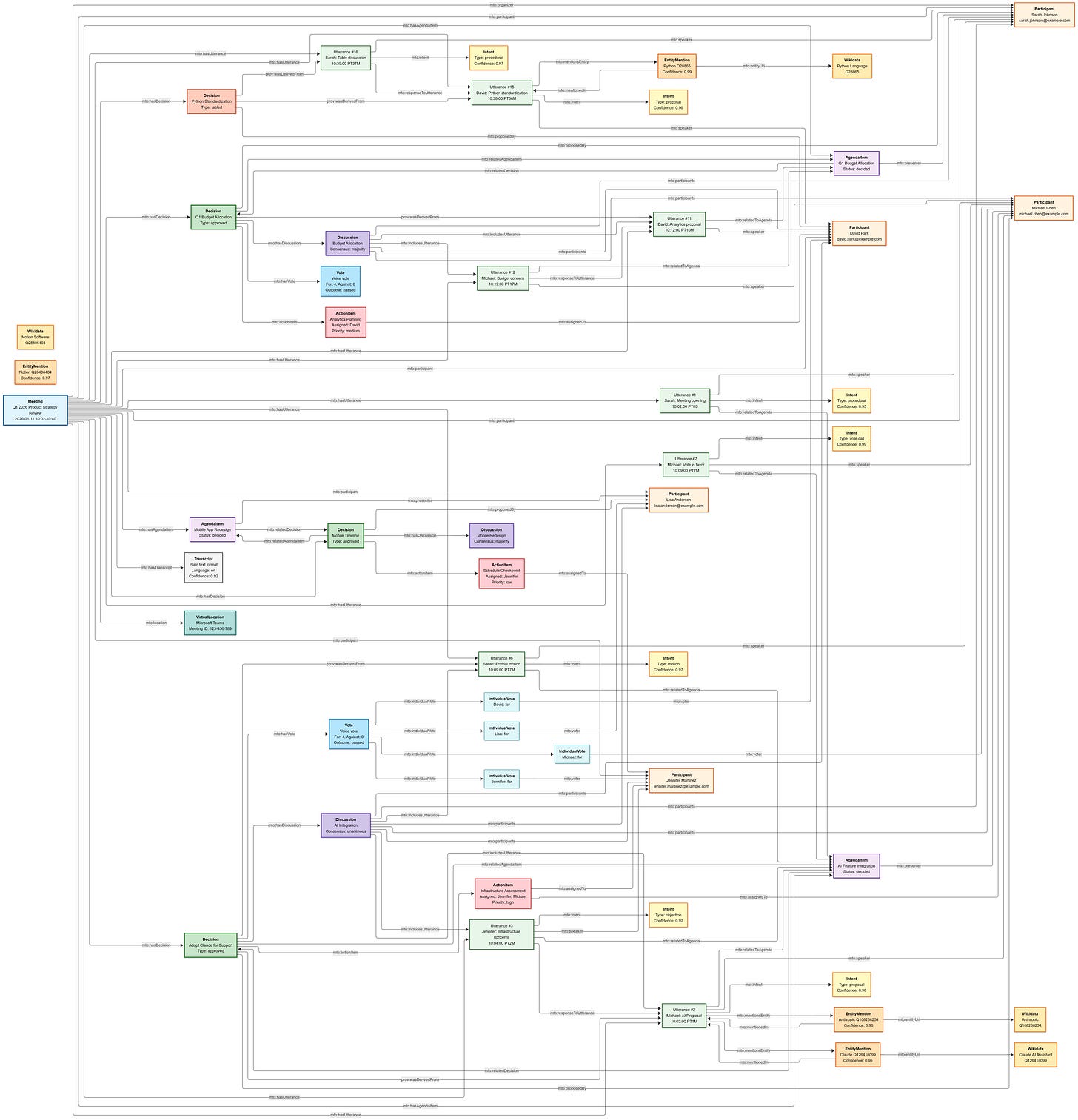

# More content in the above link.This produces a graph that looks like the following (click for full-size):

Once the TTL file is generated you can use it to provide a summary of what happened at the meeting, using the prompt:

Given the transcript TTL file, provide a summary of the meeting.Meeting Summary: Q1 2026 Product Strategy Review (Claude)

Meeting ID: meeting-20260111-product-strategy

Date: Saturday, January 11, 2026

Time: 10:02 AM - 10:40 AM PST (38 minutes)

Location: Microsoft Teams (Meeting ID: 123-456-789)

Organized by: Sarah Johnson (VP of Product)

Attendees (5)

Sarah Johnson (sarah.johnson@example.com) - VP of Product, Meeting Chair

Michael Chen (michael.chen@example.com) - Senior Product Manager

Jennifer Martinez (jennifer.martinez@example.com) - Engineering Lead

David Park (david.park@example.com) - Data Scientist

Lisa Anderson (lisa.anderson@example.com) - UX Designer

Agenda Items

1. AI Feature Integration Proposal (10:03 - 10:11 AM)

Presenter: Michael Chen

Topic: Evaluation and decision on adopting Claude by Anthropic for customer support automation

2. Q1 Budget Allocation (10:11 - 10:24 AM)

Presenter: Sarah Johnson

Topic: Allocation of $250,000 across three initiatives

3. Mobile App Redesign Timeline (10:24 - 10:37 AM)

Presenter: Lisa Anderson

Topic: Timeline and phased approach for mobile application redesign

Key Decisions

Decision 1: AI Customer Support Adoption ✅ APPROVED UNANIMOUSLY

Title: Adopt Claude for Customer Support Automation

Proposed by: Michael Chen

Vote: 4-0-0 (For-Against-Abstain) - PASSED

What was decided:

Adopt Claude by Anthropic for customer support automation

Implement proper disclosure to users about AI interaction

Provide human escalation options

Jennifer’s team to assess infrastructure requirements within 2 weeks

Rationale:

Claude provides superior response quality at a reasonable cost

Estimated cost: $8,000-$15,000/month (30% less than current human support costs)

Infrastructure capacity confirmed: 500 API calls/minute within Anthropic’s rate limits

Ethical considerations are addressed through disclosure and escalation options

Discussion Summary: The team unanimously agreed after addressing infrastructure concerns (Jennifer Martinez), technical feasibility (David Park confirmed capacity), and ethical considerations (Lisa Anderson raised disclosure requirements).

Decision 2: Q1 Budget Allocation ✅ APPROVED

Title: Q1 Budget Allocation

Proposed by: Sarah Johnson

Vote: 4-0-0 (For-Against-Abstain) - PASSED

Budget Breakdown ($250,000 total):

$40,000 - Analytics Dashboard (Phase 1)

$85,000 - AI Integration

$105,000 - Mobile Redesign

$20,000 - Contingency Fund

Rationale:

Phased approach balances competing priorities

Analytics dashboard essential for measuring success of other initiatives

Contingency fund addresses typical budget overruns

Mobile budget constrained but achievable through feature prioritization and phased release

Discussion Summary: Budget allocated with phased approach for analytics dashboard, reduced AI integration budget, and constrained mobile redesign budget with contingency fund. Consensus reached on majority vote.

Decision 3: Mobile App Redesign Timeline ✅ APPROVED

Title: Mobile App Redesign Timeline Approval

Proposed by: Lisa Anderson

Decided: Approved by consensus (no formal vote)

Timeline:

Phase 1 (April 2026): Android launch

Phase 2 (June 2026): iOS launch

February 2026: Checkpoint meeting

Rationale:

Current app rating: 3.2 stars, hurting app store visibility

Competitors (Notion, Asana) being mentioned in negative reviews

Urgency justifies proceeding despite budget constraints

Phased approach allows feedback incorporation between Android and iOS releases

Discussion Summary: Approved phased approach with Android launch in April and iOS in June, with February checkpoint meeting scheduled. Team reached majority consensus.

Decision 4: Python Standardization ⏸️ TABLED

Title: Python Standardization Proposal

Proposed by: David Park

Status: TABLED for future discussion

Proposal: Standardize on Python for all data science work (currently mix of R and Python causing maintenance issues)

Reason for Tabling: Time constraints prevented discussion. To be added to next meeting agenda.

Action Items

High Priority

AI Infrastructure Assessment

Assigned to: Jennifer Martinez, Michael Chen

Due: January 25, 2026

Description: Assess and document infrastructure requirements for Claude AI integration

Medium Priority

Begin Phase 1 Analytics Dashboard Planning

Assigned to: David Park

Due: January 18, 2026

Description: Start planning for analytics dashboard implementation

Low Priority

Schedule February Checkpoint Meeting

Assigned to: Jennifer Martinez

Due: January 15, 2026

Description: Schedule checkpoint meeting for mobile redesign progress review

Key Discussion Themes

1. AI Integration (Unanimous Consensus)

Objections raised: Infrastructure capacity concerns (Jennifer)

Addressed by: Technical analysis showing adequate capacity (David)

Additional concerns: Ethical AI disclosure (Lisa)

Resolution: Conditions added for disclosure and human escalation

2. Budget Allocation (Majority Consensus)

Tension: Competing priorities for limited budget

Initial proposal: $80,000 for analytics dashboard (David)

Concern: Dashboard not customer-facing (Michael)

Counter-argument: Essential for measuring success (David)

Resolution: Phased approach at $40,000 for Phase 1

3. Mobile Redesign (Majority Consensus)

Urgency driver: Poor app store ratings affecting visibility

Competitive pressure: Notion and Asana mentioned in reviews

Solution: Phased rollout starting with Android

Entity References (Wikidata Links)

The meeting discussion included references to:

Claude (Q126418099) - AI Assistant by Anthropic

Anthropic (Q108266254) - AI Safety company

Apache Superset (Q110283261) - Data visualization tool

Tableau (Q7672640) - Business intelligence software

Notion (Q28406404) - Productivity software (competitor)

Asana (Q4807245) - Work management platform (competitor)

Python (Q28865) - Programming language

R (Q206904) - Statistical programming language

Meeting Effectiveness Metrics

Duration: 38 minutes (scheduled 60 minutes - 37% under time)

Decisions Made: 3 approved, 1 tabled

Voting Records: 2 formal votes, both passed unanimously

Participation: 100% attendance, all 5 participants engaged

Action Items: 3 assigned with clear owners and deadlines

Consensus Level: 2 unanimous, 1 majority

Summary

The Q1 2026 Product Strategy Review was a highly productive meeting that addressed three critical strategic initiatives. The team unanimously approved the adoption of Claude AI for customer support automation with appropriate safeguards, allocated a $250,000 Q1 budget across competing priorities using a phased approach, and approved an aggressive mobile app redesign timeline to address competitive pressures.

Key themes included balancing innovation with infrastructure readiness, ensuring ethical AI practices, and managing constrained resources across multiple high-priority initiatives. All decisions included clear action items with assigned owners and deadlines.

One additional proposal (Python standardization) was tabled due to time constraints and will be addressed in a future meeting.

Next Steps

By Jan 15: Schedule February checkpoint meeting (Jennifer)

By Jan 18: Begin analytics dashboard planning (David)

By Jan 25: Complete AI infrastructure assessment (Jennifer, Michael)

February: Hold checkpoint meeting on mobile redesign progress

April: Launch Android mobile app redesign

June: Launch iOS mobile app redesign

This summary was automatically generated from the structured RDF meeting transcript using the Meeting Transcript Ontology.

Analysis (Mine)

There are several key points that are worth discussing here:

The semantic identifiers used in the transcript are not necessarily directly mappable to existing identifiers. This is why I ensured email identifiers for participants and used an existing public taxonomy (WikiData) for key named entity resolution.

Some post-processing using either SHACL properties or SPARQL Update may be useful for linking other entities to internal system identifiers. In general, you’ll likely reference an existing project by its number in the transcript, which can then correspond to an internal project number or ID. A lesson here - use RDF primarily to give you structure, but if you have known internal identifiers, they can often help tie resources together via MDM.

Utterances have a sequence number and multiple timestamps, which provide a mechanism for displaying them in the order in which they occurred.

Additionally, utterances can be correlated with both external topics and, intriguingly, intent, with a certain degree of confidence.

While not shown here, this can be correlated with Jira or a similar tracking system by passing in data (spreadsheets) that allow for correlation between internal tracking numbers and IRIs. This can result in somewhat indirect references, but these can then be used by inferencing calls in SPARQL to convert text references into IRI references.

This can also be used in conjunction with tools such as Notion, Conferences, and Microsoft Teams to handle slash commands that could, in turn, be added to the transcript and subsequently interpreted by the LLM. This isn’t in the ontology at the moment, but could be added readily enough.

The SHACL ontology is a baby ontology - it was generated based on a description with no real review (though it’s not bad for what it is), and would probably be best used in conjunction with a much more comprehensive context graph ontology. However, it’s also worth noting that the total time to create the project was under an hour, with much of that being taken by my analysis here.

Creating a context graph requires moving beyond treating your knowledge graph as a static entity and viewing it as a means to connect all interactions with process entities within your system, from initial meeting decisions through to the final process. You can integrate this with GitHub or similar repository systems so that every meeting, every JIRA ticket created, modified, or closed, and every chat-based discussion can be linked to a dynamic knowledge graph and become an operational component of your data infrastructure.

This is important because, all too often, the impetus for provenance is backward and is tied primarily to known data systems that don’t tell you much. On the other hand, context graphs provide a means, using established tools and standards, to understand where the data came from and why this data source was utilised, ultimately grounded in an utterance in a meeting room, either in person or via teleconferencing.

One additional point: Turtle is an LLM superfood, especially when coupled with an ontology and a taxonomy that structure the RDF and relate it to commonly agreed-upon terms. This can be seen in the comprehensive output that Claude in this case was able to generate solely based upon the RDF without going into the LLM for detailed metadata. This could readily be extended by the LLM to provide even more information in the output, but by mapping to RDF at the outset, you provide a much greater degree of integrity in the information you provide.

Auditor Questions & Answers

One additional benefit to this approach - you do not necessarily need to store your data in triple stores to gain the benefit of semantic reasoning. For instance, I asked the simple prompt:

Given the SHACL and Turtle, provide three questions of the meeting and give answers to those questions that may be asked by an auditor.This is the output for one of those questions:

Based on Meeting Transcript RDF Data (2026-01-11 Q1 Product Strategy Review)

Question 1: Budget Accountability and Justification

AUDITOR QUESTION:

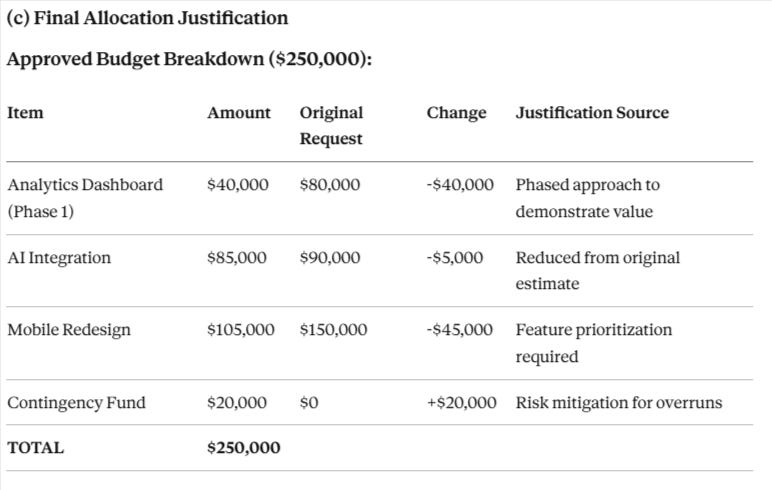

“The meeting approved a $250,000 budget allocation. Can you provide a complete audit trail showing: (a) who proposed the allocation, (b) what discussion occurred, (c) how the final amounts were justified, and (d) what accountability measures are in place?”

ANSWER (Based on RDF Data):

(a) Proposal Origin and Authority

Proposer:

Name: Sarah Johnson

Email: sarah.johnson@example.com

Title: VP of Product

Proposed At: 2026-01-11T10:11:00-08:00

Decision ID: dec-budget (mt:decision-budget-allocation)

RDF Evidence:

turtle

mt:decision-budget-allocation

mto:proposedBy mt:participant-sarah ;

mto:proposedAt "2026-01-11T10:11:00-08:00"^^xsd:dateTime .

mt:participant-sarah

foaf:name "Sarah Johnson" ;

foaf:mbox "sarah.johnson@example.com" ;

schema:jobTitle "VP of Product" .(b) Discussion Record and Deliberation

Discussion Details:

Discussion ID: disc-budget (mt:discussion-budget)

Start Time: 2026-01-11T10:11:00-08:00

End Time: 2026-01-11T10:24:00-08:00

Duration: 13 minutes

Consensus Level: Majority

Participants in Discussion:

David Park (david.park@example.com) - Data Scientist

Michael Chen (michael.chen@example.com) - Senior Product Manager

Jennifer Martinez (jennifer.martinez@example.com) - Engineering Lead

Sarah Johnson (sarah.johnson@example.com) - VP of Product

Key Utterances Contributing to Decision:

Utterance #11 (David Park - 10:12 AM):

"I'm requesting $80,000 for a comprehensive analytics dashboard that would

integrate with our data warehouse. This would use tools like Apache Superset

or Tableau for visualization. The dashboard would track key metrics including

user engagement, conversion rates, and feature adoption."Intent Type: proposal

Confidence: 0.98

Entities Mentioned: Apache Superset (Q110283261), Tableau (Q7672640)

Utterance #12 (Michael Chen - 10:19 AM):

"Could we potentially table the analytics dashboard and allocate that budget

to mobile? The dashboard, while useful, isn't customer-facing."Intent Type: amendment

Confidence: 0.90

Sentiment: neutral

Utterance #13 (David Park - 10:20 AM):

"I disagree strongly. Without proper analytics, we're flying blind. We won't

know if the AI integration or mobile redesign are actually successful. This

is a strategic investment in decision-making capability."Intent Type: objection

Confidence: 0.95

Sentiment: negative

Response To: Utterance #12

RDF Provenance Chain:

turtle

mt:decision-budget-allocation

prov:wasDerivedFrom mt:utterance-011, mt:utterance-013 ;

mto:hasDiscussion mt:discussion-budget .

mt:discussion-budget

mto:includesUtterance mt:utterance-011, mt:utterance-012, mt:utterance-013 ;

mto:consensus "majority" ;

mto:summary "Budget allocated with phased approach for analytics dashboard,

reduced AI integration budget, and constrained mobile redesign

budget with contingency fund." .Decision Rationale (from RDF):

turtle

mt:decision-budget-allocation

mto:rationale "Phased approach balances competing priorities. Analytics

dashboard essential for measuring success of other initiatives.

Contingency fund addresses typical budget overruns. Mobile

budget constrained but achievable through feature prioritization

and phased release." .Strategic Reasoning:

Analytics Dashboard Reduction: Changed to Phase 1 approach ($40K) to prove value before full investment

Contingency Fund Addition: Jennifer Martinez raised concerns about typical budget overruns

Mobile Constraints: Lisa Anderson acknowledged tight budget but confirmed achievable with phased release (Android first, then iOS)

(d) Accountability Measures

Formal Vote Record:

Vote Type: Voice vote

Conducted At: 2026-01-11T10:23:00-08:00

Result: 4 For, 0 Against, 0 Abstain

Outcome: PASSED

Individual Vote Records:

turtle

mt:vote-budget

mto:votesFor 4 ;

mto:votesAgainst 0 ;

mto:votesAbstain 0 ;

mto:outcome "passed" .

mt:individual-vote-005 mto:voter mt:participant-michael ; mto:voteValue "for" .

mt:individual-vote-006 mto:voter mt:participant-jennifer ; mto:voteValue "for" .

mt:individual-vote-007 mto:voter mt:participant-david ; mto:voteValue "for" .

mt:individual-vote-008 mto:voter mt:participant-lisa ; mto:voteValue "for" .Assigned Accountability:

Action Item: Begin Phase 1 Analytics Dashboard Planning

ID: action-analytics-plan

Assigned To: David Park (david.park@example.com)

Due Date: 2026-01-18T17:00:00-08:00

Priority: Medium

Status: Pending

turtle

mt:action-analytics-planning

dcterms:title "Begin Phase 1 Analytics Dashboard Planning" ;

mto:assignedTo mt:participant-david ;

mto:dueDate "2026-01-18T17:00:00-08:00"^^xsd:dateTime ;

mto:priority "medium" ;

mto:status "pending" .Related Decision Link:

turtle

mt:decision-budget-allocation

mto:actionItem mt:action-analytics-planning .AUDIT TRAIL SUMMARY:

✅ Complete provenance from proposal to vote

✅ All participants identified with email addresses

✅ Full discussion captured with timestamps and intents

✅ Unanimous approval with individual vote records

✅ Clear accountability with assigned owner and deadline

✅ Traceable rationale linked to specific utterances

Conclusion

The above output didn’t involve SPARQL - it simply took a graph, a schema, and a bit of a taxonomy, asked an intelligent question from the perspective of a certain role (I didn’t even write the question) and used the graph to create a very cogent response that requires precision, consistency, and logical coherence. As someone who has had to deal with data governance and fiscal auditing, this is nothing short of stunning.

I think that Context Graphs - using the processes of an organisation to drive not only data creation but to provide transparency into the decision making of an organisation - is a huge step forward in terms of both data governance and corporate governance. This is where I see LLMs shining - not as data providers but data transformers and interpreters, in ways that can then shape processes all the way from initial decision to final production, distribution, sales, and regulatory compliance.

One final rant. There is considerable discussion in the AI community about context graphs, which are being heralded as the next major opportunity for LLMs to provide a GPS-like capability for businesses. By itself, this is consultant-grade BS. LLMs are not databases - they are unreliable, error-prone, processing-intensive, can’t be easily updated, and are highly ambiguous. This is true for any CNN or RNN. LLMs are effective, as I’ve demonstrated, at analysing patterns. Yes, absolutely use them in that context, but the graph itself should be purely deterministic, and that’s not going to happen with LLMs. Period.

In Media Res,

Check out my LinkedIn newsletter, The Cagle Report.

I am also currently seeking new projects or work opportunities. If anyone is looking for a CTO or Director-level AI/Ontologist, please get in touch with me through my Calendly:

If you want to shoot the breeze or have a cup of virtual coffee, I have a Calendly account at https://calendly.com/theCagleReport. I am available for consulting and full-time work as an ontologist, AI/Knowledge Graph guru, and coffee maker. Also, for those of you whom I have promised follow-up material, it’s coming; I’ve been dealing with health issues of late.

I’ve created a Ko-fi account for voluntary contributions, either one-time or ongoing, or you can subscribe directly to The Ontologist. If you value my articles, technical pieces, or general reflections on work in the 21st century, please consider contributing to support my work and allow me to continue writing.

Very Interesting read! I was following the "operational context graph" narrative that was beginning to form possibly in addition to the existing "semantic context graph", and one of the arguments was that a new "operational context graph" layer was needed pushed by Foundation's article: https://foundationcapital.com/context-graphs-ais-trillion-dollar-opportunity/

Juan over at Dataworld/ServiceNow seemed to take a position, which I am apt to agree, that this probably wasn't a new category but that Enterprise's already had these platforms and much of that operational context would be natively provided by them via logs/transcripts etc.

It seems that Kurt's position mirror's Juan's in that all that operational context exists in these platforms (i.e. meetings transcripts in zoom etc) with the caveat it should be converted into rdf via ontology+shacl into a deterministic graph if you are going to have this idea of some governed cross system decision trace. Or in other words, this "operational context graph" isn't new but applying semantic engineering to new sources is new and AI can help structure this.